Chapter 1: Why Is AI Even Writing Vulnerable Code?

1.1 AI Training Data: Garbage In, Garbage Out

AI coding assistants learn from vast public code repositories (GitHub, Stack Overflow, etc.), ingesting both high-quality code and insecure snippets. Because they absorb common patterns indiscriminately, these models sometimes replicate unsafe practices found in their training data. In short, an AI can produce code with the same bugs and security flaws humans have made in the past. True to the motto: garbage in, garbage out.

1.2 Functionality First, Security Second

By design, generative AI focuses on delivering working code that satisfies the prompt, often without considering security unless explicitly told to. The result is “vibe coding”: rapidly scaffolding an app that works but may lack hardened defenses. This speed-over-safety approach means AI suggestions tend to use default configurations or simplistic solutions, which can include insecure defaults and vulnerabilities if not reviewed.

1.3 Real-World Impacts and Prevalence of AI-Induced Flaws

AI’s blind spots have tangible consequences. Recent research shows nearly 52% of AI-generated code contains an OWASP Top 10 security vulnerability. In one case, a startup founder saw their production database accidentally wiped out after executing a single AI-recommended command in a live environment.

Common issues introduced by AI-coded apps include missing access controls, hard-coded secrets, unsanitized inputs, and other “sloppy” patterns, foreshadowing the vulnerabilities we’ll address in this course.

Chapter 2: Common Web Vulnerabilities (OWASP Top 10 Overview)

2.1 Understanding the OWASP Top 10

Before we dive into AI-specific vulnerabilities, it helps to review web application vulnerabilities in general. As noted in Chapter 1, AI learns from scraped, human-written code, so it inherently reproduces the same mistakes humans make. The OWASP Top 10 is a renowned list of the most critical web application security risks, maintained by security experts and updated periodically. It provides a high-level overview of common vulnerability categories that developers should be aware of. The list received a new update in 2025, its first since 2021, and with the rise of vibe coding and LLM-assisted programming, it had to adapt to the growing volume of vulnerable software being created. We use it as a roadmap for this course because it covers the major types of weaknesses that attackers exploit in web apps.

2.2 The Ten Vulnerability Categories at a Glance

Below are the OWASP Top 10 (2025) categories, each representing a broad class of vulnerabilities:

1. Broken Access Control

Flaws in enforcing user permissions, allowing unauthorized actions.

For example, a user changes the URL from /dashboard?user=123 to /dashboard?user=124 and views another customer’s generated bedtime stories, because the app trusts the query parameter and never checks whether the requester owns that record.

2. Security Misconfiguration

Insecure settings or default configs in software or cloud services. For example, your SaaS ships its admin panel publicly at /super-secret-admin-panel, running Next.js in “dev mode” with DEBUG=true. It exposes full error stacks and API routes and leaks your internal Supabase, Stripe, Resend, Mailgun, or other API keys.

3. Software Supply Chain Failures (Vulnerable Components)

Using libraries or packages with known flaws or malware. For example, the app uses an outdated npm package, jsonwebtoken 8.5.1, which contains a known signature-verification bypass that lets attackers forge session tokens without the private key.

4. Cryptographic Failures

Weak or missing encryption and poor key management, leading to data exposure. For example, user passwords are stored in your database as plain text instead of being hashed with a slow algorithm like bcrypt or Argon2, and API tokens are never encrypted with something like AES‑256. Anyone who leaks or accesses the DB immediately gains full account takeover ability.

5. Injection Attacks

Unsanitized input tricking an application into executing unintended commands or queries. A classic: your search box runs db.users.find({ name: userInput }) without sanitizing. A user submits {"$ne": null}, the app parses it as a query object instead of a plain string, and suddenly they see every user. Or worse, Indiana Jones'; DROP TABLE customers;-- executes because the app never sanitizes or parameterizes its SQL queries. That would delete your entire customers table, by the way.

6. Insecure Design

Fundamental design flaws in the system architecture or logic (lack of threat modeling). You let users upload avatars with no size or rate limit ➡️ someone uploads 10 GB files and kills your server. Or you allow unlimited password reset emails ➡️ someone scripts it and locks everyone out.

7. Authentication Failures

Incorrect or absent user identity verification (e.g. broken login mechanisms).

You built login with AI and it forgot rate limiting ➡️ someone brute-forces 1 million passwords in 10 minutes. Or the “forgot password” link is predictable (reset?token=123), so anyone can guess it.

8. Software/Data Integrity Failures

Failure to verify software and data integrity (e.g. trusting untrusted code, deserialization issues).

Your app auto-updates user profiles from untrusted JSON (deserialization). Attacker sends {"isAdmin": true} and becomes admin. Or you use an insecure library that lets attackers inject code during deserialization.

9. Logging & Monitoring Failures

Inadequate logging or alerting, leaving attacks undetected. Imagine someone is poking around and nothing is logged. Someone tries to log in as admin 5000 times and you have no idea. Or you log everything… to the browser console in production (leaking secrets & configs).

10. Mishandling of Exceptions

Poor error handling and unintended behaviors when the system faces unexpected conditions. Your app crashes and shows the full error stack with database passwords when something unexpected happens. Or it silently fails and lets the user continue with half-saved data (leading to corrupted accounts).
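For the last category, here is a minimal Python sketch of both fixes, using hypothetical names and an in-memory SQLite table (`accounts(id, credits)` is an assumed schema). The two balance updates run inside one transaction, so a failure cannot leave half-saved data, and the handler logs the full traceback server-side while the user only gets a generic message plus an error ID. Treat it as an illustration of the pattern, not a drop-in implementation.

```python
import logging
import sqlite3
import uuid

logger = logging.getLogger("app")


def transfer_credits(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> dict:
    """Move credits between two accounts: all-or-nothing, with a safe error response.

    Hypothetical schema for this sketch: accounts(id INTEGER PRIMARY KEY, credits INTEGER).
    """
    try:
        with conn:  # commits if the block succeeds, rolls back if anything raises
            conn.execute("UPDATE accounts SET credits = credits - ? WHERE id = ?", (amount, src))
            (balance,) = conn.execute(
                "SELECT credits FROM accounts WHERE id = ?", (src,)
            ).fetchone()
            if balance < 0:
                raise ValueError("insufficient credits")  # rollback undoes the debit above
            conn.execute("UPDATE accounts SET credits = credits + ? WHERE id = ?", (amount, dst))
        return {"ok": True}
    except Exception:
        error_id = uuid.uuid4().hex
        # The full stack trace goes to the server-side log only
        logger.exception("transfer failed (error_id=%s)", error_id)
        # The user sees a generic message and an ID for support, nothing internal
        return {"ok": False, "error": "Something went wrong. Please try again.", "error_id": error_id}
```

Calling it with an amount larger than the balance returns the generic error and leaves both balances exactly as they were.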

2.3 Why “Vibe” Coded Apps Are Especially At Risk

AI-generated apps often unintentionally check off several of the above risk categories. Studies show that input validation omissions (leading to injection flaws) are the most frequent security issue in AI-written code. Similarly, prompts that don’t specify security can result in no authentication or access control, meaning anyone might use sensitive features freely. “Vibe” coding also tends to introduce hard-coded secrets (API keys in code) and rely on many external packages, even outdated or non-existent ones. These habits increase exposure to vulnerabilities like Broken Access Control, Injection, and Software Supply Chain attacks. In the following chapters, we’ll delve into each category, explaining how these weaknesses occur (often in AI-generated code) and how to fix them.

Chapter 3: Securing AI-Generated Code (OWASP Top 10 Mitigations)

Now that we’ve seen why AI can produce insecure code and what the common vulnerabilities are, let’s dive deeper into each OWASP Top 10 category. For each vulnerability type, we’ll explain what it is in more detail, show how “vibe coding” (AI-generated code) might accidentally introduce it, and most importantly, outline how to avoid it. The goal is to turn rapid AI-produced prototypes into secure applications.

3.1 Broken Access Control: Leaving the Door Wide Open

A security guard asleep on duty: a perfect metaphor for Broken Access Control, where no one is actively enforcing who gets in.

Broken Access Control flaws occur when an application doesn’t properly enforce who is allowed to do what. In other words, the “guards” (authorization checks) are off duty or missing, so users can view or modify data they shouldn’t. For example, if user A can simply change a URL or request parameter to see user B’s account (?user=124 instead of ?user=123), that’s broken access control: the app isn’t verifying ownership and just lets everyone in. It’s like leaving the VIP section unroped; anyone can stroll right in (owasp.org). Unfortunately, AI-generated code often forgets these guardrails. By default, an AI might eagerly create a working API or UI without adding permission checks, because unless you specifically asked for security, it doesn’t “know” the requirement (medium.com). One study noted that missing or weak access controls are among the most frequent issues in vibe-coded apps (csoonline.com). Essentially, the AI leaves the door wide open.

To prevent Broken Access Control, you need to play bouncer in your app. Always enforce user identity and permissions on sensitive operations. Here’s how to keep the door locked to intruders:

-

Ask for Authz Logic: When prompting your AI assistant, explicitly mention access control. For example: “Ensure that only the record owner or an admin can access this endpoint.” If you don’t, the AI might not include any check at all (it’s just trying to fulfill the functional request). Remember, the AI doesn’t inherently know which parts of your app should be private (medium.com).

-

Deny by Default: Follow the principle of least privilege. By default, deny access to everything, and then allow only what’s necessary (owasp.org). In practice, this means your AI-generated code should include checks like “if currentUser != resource.owner then reject” before allowing an action. If the AI’s output doesn’t have that, you must add it.

-

Reuse Proven Solutions: Ideally, use frameworks or libraries for authorization. For instance, in a web app, use built-in authentication/authorization middleware (Django’s permissions, Spring Security, etc.) rather than rolling your own logic. If you prompt the AI to use such a framework (e.g., “Use Django’s @login_required decorator and object-level permissions”), you’re less likely to get a naive “sleeping guard” implementation.

-

Test for Lock Picking: Even after implementation, test by trying to access data you shouldn’t (just like an attacker would). Can user A access user B’s data by changing an ID or URL? If yes, the lock is still broken. Keep iterating until the answer is no.

Stressing these points in your prompts and reviewing the AI’s code for authorization checks will help ensure that each user only accesses what they’re meant to. You want your app to be more like a nightclub with a strict guest list, not a free-for-all house party.
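The “deny by default” rule from the list above can be sketched in a few lines of framework-agnostic Python. The `User` and `Story` classes, the `is_admin` flag, and `PermissionDenied` are hypothetical stand-ins for whatever your framework provides (Django, for example, ships its own `PermissionDenied` exception). The key points: the current user comes from the authenticated session, and every path that isn’t explicitly allowed ends in a refusal.

```python
from dataclasses import dataclass
from typing import Optional


class PermissionDenied(Exception):
    """Raised when the current user may not access a resource (hypothetical)."""


# Hypothetical types standing in for your framework's user and model classes
@dataclass
class User:
    id: int
    is_admin: bool = False


@dataclass
class Story:
    id: int
    owner_id: int
    text: str


def get_story_for(current_user: Optional[User], story: Story) -> Story:
    """Return the story only to its owner or an admin; refuse everyone else.

    `current_user` must come from the authenticated session, never from a
    client-controlled value like ?user=124.
    """
    if current_user is None:
        raise PermissionDenied("login required")
    if current_user.is_admin or story.owner_id == current_user.id:
        return story
    # Deny by default: anything not explicitly allowed above is refused
    raise PermissionDenied("not your story")
```

With this check in place, user 123 requesting user 124’s story gets a `PermissionDenied` no matter what the URL says, which is exactly what the “lock picking” test should confirm.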

3.2 Security Misconfiguration: Default Settings Strike Again

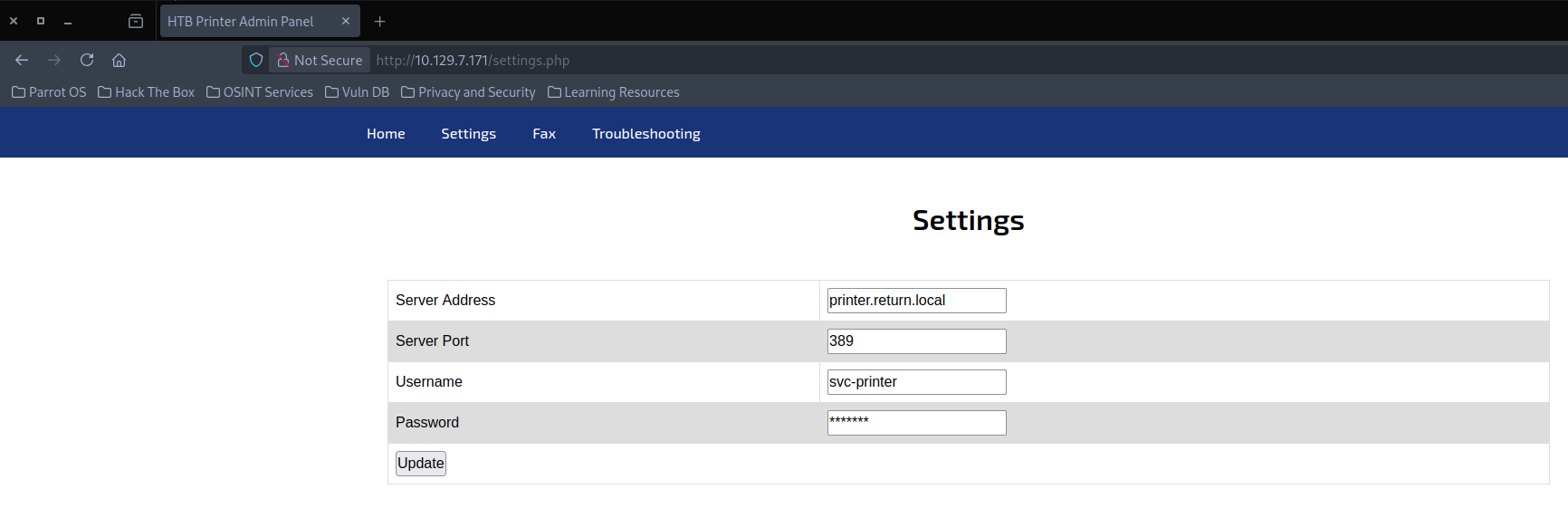

Every piece of software comes with knobs and switches (configuration settings) that, if set wrong, can introduce security holes. Security Misconfiguration is when those knobs are left in insecure positions, often the default ones. Think of an app running in debug mode in production, spewing sensitive info, or an admin panel left open with the username “admin” and password “admin”. In vibe-coded projects, this happens a lot because AI typically uses whatever default settings or example code it has seen, which might not be production-safe. Unless instructed otherwise, an AI might configure a new server or service with sample credentials and default ports, and enable every feature for “convenience” (owasp.org & owasp.org). This is the digital equivalent of leaving the keys under the doormat: convenient, but asking for trouble.

For example, you might deploy an AI-generated Node.js app and realize it’s serving the default “Welcome to Express” page on an open port, with no security headers. Or perhaps your AI-coded cloud setup leaves storage buckets world-readable. Databases left exposed with default passwords are a notorious real-world cause of massive data leaks, all due to misconfiguration. AI won’t inherently remember to turn off sample accounts or change defaults unless you tell it to. In one anecdote, an AI-created app shipped with debugging enabled (DEBUG=true) and a publicly accessible admin route, exposing internal APIs and secrets to anyone who stumbled upon it.
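Missing security headers are one of the easier defaults to fix. As a framework-agnostic sketch (standard library only), here is a tiny WSGI middleware that appends a few widely used headers to every response unless the app already set them. The header set and values are assumptions to adapt, especially the Content-Security-Policy, and most frameworks offer a built-in setting or plugin that does the same job.

```python
# Commonly recommended headers; the values here are illustrative, tune them for your app
SECURITY_HEADERS = [
    ("Strict-Transport-Security", "max-age=31536000; includeSubDomains"),  # only honored over HTTPS
    ("X-Content-Type-Options", "nosniff"),
    ("X-Frame-Options", "DENY"),
    ("Referrer-Policy", "strict-origin-when-cross-origin"),
    ("Content-Security-Policy", "default-src 'self'"),
]


def security_headers_middleware(app):
    """Wrap a WSGI app so every response carries the security headers above."""

    def wrapped(environ, start_response):
        def start_with_headers(status, headers, exc_info=None):
            present = {name.lower() for name, _ in headers}
            # Don't override a header the app set deliberately
            extra = [(n, v) for n, v in SECURITY_HEADERS if n.lower() not in present]
            return start_response(status, list(headers) + extra, exc_info)

        return app(environ, start_with_headers)

    return wrapped
```

Wrapping is a one-liner, e.g. `app = security_headers_middleware(app)`, which keeps the hardening in one place instead of scattered across handlers.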

To avoid these misconfiguration pitfalls, you need to harden your defaults. Here are some steps to take (and to prompt your AI for):

-

Explicitly Disable Debug/Dev Modes: Never run in dev mode in production. If using an AI to generate framework code, add a prompt like “configure for production (disable debug, use secure settings)”. Double-check that flags like DEBUG and verbose error messages are off in the final config; an AI might leave verbose error output on, which can reveal stack traces or config files to users. A dedicated production config file helps keep them off.

-

Change Default Credentials and Keys: Many out-of-the-box setups come with known defaults (e.g., admin/admin login, or a default encryption key). Always change or randomize these. When your AI sets up something like an admin user, prompt it to allow setting a secure password or explicitly mention “no hardcoded default password.” If the AI created one for convenience, remove or change it immediately.

-

Minimize Unnecessary Features: Security misconfigurations often arise from “feature bloat.” The AI might enable services or add dependencies you don’t actually need (simply because it saw them in examples). Review and disable any feature or plugin that isn’t required. For example, if an AI-generated config opens up extra ports or enables a sample API, strip those out. Fewer running parts means fewer things that can be misconfigured.

-

Consistent Environments: Use infrastructure-as-code or scripts to set up environments securely and consistently. For instance, deploy with a known-good config template. You can even prompt the AI: “Provide a hardened Nginx config for production”. Ensure that security settings (allowed hosts, CORS rules, security headers, etc.) are appropriately set across dev, test, and prod. A repeatable hardening process is key (owasp.org & owasp.org).

-

Stay Updated on Patches and Settings: Misconfiguration can also mean not keeping up with security updates or recommended settings. The AI won’t magically know about a new patch or a secure setting added in version X.Y, so keep an eye on documentation or security guides for the stack you’re using. (This one is on you, the developer: your AI assistant won’t read OWASP updates unless you feed them in.)
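Several of these steps (debug off, no default keys, explicit allowed hosts) can be enforced in code so a bad config fails at startup instead of in production. Below is a minimal plain-Python sketch of such a settings loader; the variable names (`APP_ENV`, `APP_DEBUG`, `APP_SECRET_KEY`, `APP_ALLOWED_HOSTS`) and the 32-character minimum are assumptions for illustration, not any framework’s API.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    debug: bool
    secret_key: str
    allowed_hosts: tuple


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read settings from environment variables and fail closed in production.

    The APP_* variable names are assumptions for this sketch.
    """
    # Treat the deployment as production unless it explicitly says otherwise
    is_prod = env.get("APP_ENV", "production") == "production"
    debug = env.get("APP_DEBUG", "false").lower() == "true"  # off unless turned on
    secret = env.get("APP_SECRET_KEY", "")
    hosts = tuple(h.strip() for h in env.get("APP_ALLOWED_HOSTS", "").split(",") if h.strip())

    if is_prod:
        if debug:
            raise RuntimeError("APP_DEBUG must be off in production")
        if len(secret) < 32:
            raise RuntimeError("APP_SECRET_KEY is missing or too short (no sample keys)")
        if not hosts or "*" in hosts:
            raise RuntimeError("APP_ALLOWED_HOSTS must list explicit hostnames")
    return Settings(debug=debug, secret_key=secret, allowed_hosts=hosts)
```

The design choice worth copying is the direction of the defaults: forgetting a variable makes the app refuse to start, rather than quietly running with debug on or a sample key.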

By actively guiding the AI toward secure configurations and double-checking after, you can avoid the classic “it worked with defaults, so I left it that way” trap. Remember, default often means “one size fits all,” which in security usually means “one size fits nobody particularly well.” Secure configuration is all about tailoring those settings to lock down your application.

3.3 Software Supply Chain Failures: One Bad Package Spoils the Bunch

Modern apps rely on tons of third-party code: libraries, frameworks, and packages from npm, PyPI, etc. Software Supply Chain Failures refer to vulnerabilities introduced by those external components. This could mean using an outdated library with a known security hole, or outright installing a malicious package. Here’s the kicker: AI coding assistants often suggest libraries (by name) as part of their solutions, and they might not choose wisely. They’re likely to pick whatever was common in their training data, regardless of whether that version is now obsolete or the package has since been hijacked by attackers.

Consider this scenario: You ask an AI to hash passwords, and it suggests installing a package named `crypto-secure-hash`. It looks legit, so you `npm install` it. Unknown to you, the AI hallucinated that package: it didn’t exist until an attacker saw people (and AIs) trying to fetch it and quickly published a malicious package under that name. Suddenly, you’ve pulled malware into your project without knowing (medium.com & medium.com). This isn’t hypothetical: researchers found AI models “invent” nonexistent packages roughly 20% of the time, and attackers are actively preloading those names with exploits. This new attack vector even has a name, “slopsquatting”: like typosquatting, except you don’t have to mistype anything; you just have to trust an AI suggestion.

Even when the package name is real, the AI might pull in a vulnerable version. It has seen code from years past, so unless the prompt or context says “latest version,” it could regurgitate an old example. For instance, you might get `jsonwebtoken@8.5.1` recommended, not realizing that version has a known signature-bypass flaw. The AI doesn’t read CVE feeds, so it might unknowingly introduce a library that attackers already know how to exploit. In the worst case, your vibe-coded app becomes vulnerable the moment you install its dependencies.
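Checking a pinned version against a known advisory is mechanical once you have the data. The sketch below hard-codes a hypothetical one-entry advisory table (jsonwebtoken versions before 9.0.0, the release that fixed the 8.5.1 issues) and flags anything older. In a real project, get this data from `npm audit`, `pip-audit`, or an advisory database such as OSV rather than a hand-maintained dict.

```python
# Hypothetical, hand-written advisory data: package name -> first fixed version
FIRST_FIXED = {
    "jsonwebtoken": (9, 0, 0),
}


def parse_version(text: str) -> tuple:
    """Parse an exact 'MAJOR.MINOR.PATCH' string, as found in a lockfile, into ints.

    Pre-release tags such as '9.0.0-beta.1' are out of scope for this sketch.
    """
    return tuple(int(part) for part in text.strip().split("."))


def find_vulnerable(pinned: dict) -> list:
    """Return the names of pinned packages older than their first fixed version."""
    flagged = []
    for name, version in pinned.items():
        fixed = FIRST_FIXED.get(name)
        # Tuples compare element by element, so (8, 5, 1) < (9, 0, 0)
        if fixed is not None and parse_version(version) < fixed:
            flagged.append(name)
    return flagged
```

For example, `find_vulnerable({"jsonwebtoken": "8.5.1", "express": "4.19.2"})` flags only `jsonwebtoken`, because the sketch’s table has no entry for `express`.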

How do we mitigate supply chain risks in AI-generated code? A few strategies:

-

Verify and Vet Dependencies: Don’t blindly install packages the AI suggests. Take a moment to check: Is the package legit and maintained? What version is recommended? If the AI gave a version number, cross-check it against the package’s changelog or an advisory database. If it didn’t, install the latest secure version (and consider adding `npm audit` or an equivalent to catch known vulnerabilities). You might prompt the AI, “Use the latest stable version of X library” to nudge it in the right direction.

-

Watch Out for Hallucinated Packages: If an AI recommends a library you’ve never heard of and it looks oddly generic or off-brand (`crypto-secure-hash` does sound a bit on the nose, right?), treat it as suspect. Search for it online. If it doesn’t exist, or appeared only recently with few stars/downloads, do not use it. As a rule, stick to popular, well-known libraries for critical functionality. You can even instruct: “Only use standard libraries or widely-used packages for XYZ.”

-

Keep Dependencies Updated: This is classic advice but bears repeating. After using AI to scaffold your app, enable automated dependency updates or at least regularly update your packages to pull in security fixes. The AI’s snapshot of the world is frozen at training time; it won’t magically update the code for you next year when a new vulnerability is found. Use tools like Dependabot, npm audit, pip-audit, etc., to stay on top of this.

-

Manual Code Review for Introduced Libraries: If your AI adds a dependency, take a quick look at that dependency’s own docs or code (if feasible). The AI might be including it to solve a problem, but ensure it’s not doing something sketchy. For example, is that package requesting excessive permissions or making outbound network calls unexpectedly? A cursory review can reveal if you’ve pulled in a trojan horse.

-

Lock and Verify: Use package locks (`package-lock.json`, `Pipfile.lock`, etc.) and consider verifying hashes or signatures of dependencies if the ecosystem supports it. This ensures you install exactly what you intend, not something altered. Some package managers support integrity verification out of the box. It might be overkill for small projects, but for anything security-sensitive, it’s worth it.
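The “Lock and Verify” idea boils down to comparing a cryptographic digest of what you downloaded against the digest recorded when you locked the dependency. pip’s `--require-hashes` mode and the `integrity` field in `package-lock.json` do this for you; the standard-library sketch below just shows the mechanism. The file path and expected digest you pass in are placeholders for values from your own lockfile.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Stream a file through SHA-256 (without loading it all into memory) and return the hex digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: str, expected_sha256: str) -> None:
    """Raise if the file's digest differs from the one pinned in your lockfile."""
    actual = sha256_of_file(path)
    if actual != expected_sha256.strip().lower():
        raise ValueError(f"hash mismatch for {path}: got {actual}")
```

If a package was swapped or tampered with after you locked it, the digest no longer matches and installation stops instead of silently pulling in the altered code.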

In short, treat AI-suggested dependencies with zero trust until proven otherwise. The convenience of “just add this library” can’t trump the due diligence of checking what that library is. The AI is great at suggesting how to solve a problem, but you must decide if a third-party solution is trustworthy (owasp.org). By auditing your supply chain, you prevent that one bad package from spoiling your whole application.

3.4 Cryptographic Failures: The Plaintext Peril

Cryptographic failures involve anything related to data protection: think weak encryption, or secrets and passwords stored in plain text. In vibe-coded apps, these failures are alarmingly common, as AI often follows the path of least resistance (or the most Stack Overflow posts). For example, an AI might suggest storing user passwords directly, or using an outdated hash like MD5 because “that’s what it saw in many code examples.” In fact, researchers have noted that AI assistants tend to recommend outdated cryptography practices; they’ll confidently use MD5 or SHA-1 for hashing passwords simply because those appeared frequently in training data. Worse, if not explicitly prompted about security, an AI might skip encryption or hashing entirely, leaving sensitive data in plain sight. It’s the Plaintext Peril: your users’ passwords, API tokens, and personal info, all sitting unencrypted in a database or config file, one breach away from being exposed.

Another facet of this is hard-coded secrets. AI models frequently bake API keys or credentials right into the code they generate because, well, they’ve seen lots of public code where developers mistakenly did the same. The model doesn’t know it’s a bad practice; it’s just mimicking what’s common. As a result, you might get a perfectly working snippet that connects to, say, a cloud service, but with your secret token plainly visible in a string. If you launch that code, anyone inspecting the app (or your repo) can find those keys and misuse them. According to one security expert, hardcoded secrets are one of the biggest red flags in AI-generated apps. They show up often, along with other “sloppy behaviors” (csoonline.com).
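Moving a key out of the source usually looks like this: read it from an environment variable at startup and fail loudly if it’s missing, rather than falling back to a hard-coded value. `PAYMENT_API_KEY` and `MissingSecret` are hypothetical names for this sketch; a secrets manager client would slot into the same function.

```python
import os


class MissingSecret(RuntimeError):
    """Raised when a required secret is not configured (hypothetical helper)."""


def require_secret(name: str) -> str:
    """Fetch a secret from the environment; never fall back to a literal in code."""
    value = os.environ.get(name)
    if not value:
        # The error names the variable but never prints any secret value
        raise MissingSecret(f"{name} is not set; configure it in the environment or a secrets manager")
    return value


# Insecure: API_KEY = "ABC123"  (visible to anyone with access to the repo or bundle)
# Safer: resolved at runtime, never committed (PAYMENT_API_KEY is a hypothetical name)
# PAYMENT_API_KEY = require_secret("PAYMENT_API_KEY")
```

Failing at startup is deliberate: a missing key surfaces immediately in deployment instead of tempting someone to paste the real value into the code “just for now.”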

So how do we banish the Plaintext Peril and ensure strong cryptography in our AI-assisted code? Here are the must-dos:

-

Never Store Sensitive Data Unencrypted: This is non-negotiable. Passwords must be hashed with a modern, strong hashing algorithm and a unique salt. Prompt the AI to do this: “Store passwords using bcrypt with a random salt” or “Use Argon2 for hashing passwords.” If the AI writes code that saves the raw password or uses something weak like `hash(password, 'md5')`, that’s a huge red flag: intervene immediately. In one analysis, AI often suggested either outdated hashing or none at all; you must ensure it uses well-vetted methods like bcrypt or Argon2.

-

Externalize Secrets: No API keys, tokens, or credentials should be directly in code. Instead, use environment variables or a secure secrets vault. You can guide the AI by saying something like, “Load the API key from an environment variable”. If the code it gives you has API_KEY = "ABC123", move that out to a config file or env var before using it. As a guideline from the Open Source Security Foundation (OpenSSF) puts it, every AI session should get the instruction “never include secrets in code; use env variables”.

-

Use Up-to-date Cryptography Libraries: Don’t reinvent crypto, and don’t misuse low-level functions. If your AI coding assistant starts writing a custom encryption function or using the language’s built-in crypto in an insecure way (e.g., AES in ECB mode or a constant IV it found somewhere), stop and switch to a reputable library. Prompt example: “Use the ‘crypto’ library’s recommended secure functions” or “Use libsodium (or PyCA’s cryptography, etc.) to encrypt this data”. This way, you leverage well-tested implementations. Also ensure the AI follows current best practices: for instance, in Java, the AI should pick PBKDF2 or bcrypt for password hashing, not a single round of SHA-256.

-

Enforce Transport Security: Cryptographic failures aren’t just about storage; they include data in transit. An AI might set up an API over HTTP (because it’s simpler in examples) unless told to use HTTPS. Always deploy with TLS (HTTPS), and if you’re generating code or config with AI, mention “with SSL/TLS”. Also consider setting secure cookies (with the Secure and HttpOnly flags); AI might not remember those on its own.

-

Review and Refine: After generation, review the code for any mentions of cryptographic algorithms or keys. Are they up to modern standards? For example, if you see RSA with 1024-bit keys or an old cipher, that’s outdated. If you see hardcoded passwords for a database connection, that’s a problem. You may need to explicitly re-prompt the AI: “Use a config file or env var for DB password and document how to set it.” Essentially, treat AI-generated code as untrusted until you verify it meets security basics, including that secrets are not exposed and cryptography is properly handled.
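Here is what “hash, don’t store” can look like using only Python’s standard library. To stay dependency-free, this sketch swaps in PBKDF2-HMAC-SHA256 (one of the acceptable options named above) instead of bcrypt or Argon2; with a library such as `bcrypt` or `argon2-cffi`, the shape is the same. The 600,000-iteration default follows OWASP’s current guidance for PBKDF2-HMAC-SHA256, and the `pbkdf2_sha256$...` storage format is an assumption of this sketch.

```python
import hashlib
import hmac
import secrets

# OWASP's recommended minimum for PBKDF2-HMAC-SHA256; PBKDF2 is swapped in here
# for bcrypt/Argon2 only to keep the sketch stdlib-only
ITERATIONS = 600_000


def hash_password(password: str, iterations: int = ITERATIONS) -> str:
    """Return 'pbkdf2_sha256$iterations$salt_hex$hash_hex' for storage (assumed format)."""
    salt = secrets.token_bytes(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return f"pbkdf2_sha256${iterations}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and iterations, then compare in constant time."""
    algorithm, iterations, salt_hex, hash_hex = stored.split("$")
    if algorithm != "pbkdf2_sha256":
        return False
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), bytes.fromhex(salt_hex), int(iterations))
    return hmac.compare_digest(digest.hex(), hash_hex)
```

Storing the iteration count alongside the salt means you can raise it later: new hashes use the higher cost while old ones still verify.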

In summary, secure the secrets and use proven crypto. AI can save us time writing boilerplate, but it’s not a cryptography expert. You, as the developer, have to ensure that all sensitive data remains secret, both at rest and in motion. With careful prompting and thorough review, you can prevent your AI assistant from inadvertently inviting attackers to a “plaintext party.”

3.5 Injection Attacks: Little Bobby Tables Strikes Again

Then there are the classic Injection Attacks, where an application takes untrusted input and inadvertently treats it as code or a command. This category includes SQL injection, OS command injection, and cross-site scripting (XSS), among others. It’s every developer’s nightmare from Web Security 101, and unfortunately, AI-generated code is particularly prone to it. Why? Because unless explicitly told to sanitize or use safe APIs, the AI will often build queries or commands by simple string concatenation, just as it has seen in countless insecure examples online. The result is that “Little Bobby Tables” (from the famous xkcd comic) is alive and well in vibe-coded apps, ready to drop your database tables or worse.

The famous “Little Bobby Tables” xkcd comic, where a school’s database gets wiped because no one sanitized the input “Robert'); DROP TABLE Students;--”. Always sanitize inputs! (Source: xkcd.com)

Consider an AI-generated snippet like:

```python
# Insecure example (assumes a Flask app; `db` is a hypothetical database handle)
from flask import request

name = request.args.get('name')
query = f"SELECT * FROM users WHERE name = '{name}'"  # user input pasted into SQL
db.execute(query)
```

Looks innocent, right? It works… for normal names. But if an attacker sets name to Robert'; DROP TABLE users;--, that string gets pasted right into the SQL query without any escaping. Boom: your users table is gone faster than you can say “it’s gone” (provided the database driver runs stacked statements, as many do). The AI didn’t intentionally write a bomb; it just followed the prompt to make a query and didn’t know it should escape or parameterize it. Studies show that this is the most common security flaw in AI-generated code: omissions in input validation that lead to injection vulnerabilities (csoonline.com & sdtimes.com). In one analysis, large language models failed to secure code against cross-site scripting 86% of the time! That means if there was a place to inject a <script>alert('XSS')</script> into a web page, the AI’s code probably left it wide open.
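For contrast, here is the same kind of lookup written with a parameterized query, using Python’s built-in `sqlite3` so it runs without a database server. The table and rows are made up for the demo. The point is that the driver binds the attacker’s string as a value, so it is compared against names instead of being executed as SQL.

```python
import sqlite3

# Demo database: the table and rows are made up for illustration
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("Alice",), ("Robert",)])


def find_users(name: str) -> list:
    # The ? placeholder binds `name` as data; it can never change the SQL itself
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


print(find_users("Alice"))                         # [(1, 'Alice')]
print(find_users("Robert'; DROP TABLE users;--"))  # [] (just an odd name, nothing dropped)
print(conn.execute("SELECT COUNT(*) FROM users").fetchone())  # (2,)
```

Same input, completely different outcome: the malicious string is simply a name that no user has.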

Side note: what SQL injection was in 2005, missing RLS (Row Level Security) and exposed JWTs in Supabase projects are today. That is worth a whole other article.

Back on track… how do we keep Little Bobby Tables and his friends out? By diligently sanitizing, validating, and using safe patterns for any code that handles user input. Here’s how to do that when working with AI:

-

Use Parameterized Queries and Safe APIs: This is the golden rule for preventing SQL injection (and similar injection in NoSQL or OS commands). When prompting the AI or reviewing its output, ensure it’s using placeholders or parameter binding rather than string concatenation. For example, in Python with something like psycopg2, you’d want cursor.execute("SELECT * FROM users WHERE name = %s", (name,)) instead of building the string yourself. If the AI doesn’t do this by itself, explicitly prompt: “Use a parameterized query in the example.” The same goes for other languages and databases (prepared statements in Java JDBC, etc.). Never accept an AI answer that just slaps input into a query string.

-

Validate Input Rigorously: Ideally, your AI-generated code should include checks on the format and content of inputs, but it often won’t unless asked. You can prompt it: “Include input validation.” For instance, if name should only be alphabetic, validate that and reject anything else before it ever touches a query. Beyond type and format checks, consider length checks (to prevent ridiculously long inputs that could be malicious). As a rule of thumb: all input is evil until proven otherwise. If your AI assistant doesn’t treat it as such, you need to.

-

Escape or Encode Outputs: In the context of HTML and XSS, any time you’re inserting user-provided data into a page, it must be properly escaped or encoded. AI might not automatically remember to use templating auto-escape features or HTML-encoding on output, so remind it or add it yourself. For example, if the AI sets up a templating engine, make sure auto-escaping is on (Jinja2 with autoescaping enabled, which Flask turns on for HTML templates), or explicitly use escape functions for user data. Veracode’s research found an abysmal success rate for AI at preventing XSS, so never assume the AI handled it.

-

No Eval or System Calls on User Data: This goes for any language: do not execute user input as code. Sometimes AI, trying to be clever or succinct, might use dangerous functions. For example, Python’s eval() or exec() might get used to parse JSON or math expressions because it’s a common snippet online. That’s a huge no-no if the input isn’t trusted (for JSON, json.loads does the job safely). Similarly, building a shell command string and running it is risky. If you see the AI doing something like os.system("rm " + user_input), you should hear alarm bells. Prompt the AI to find a safer method or do proper sanitization, or better, remove such patterns entirely. Never run eval on user data; one guideline goes further and says to treat all AI-generated code as potentially malicious input itself.

-

Leverage Framework Protections: Many frameworks and libraries have built-in mitigations for injection if you use them correctly. An AI might inadvertently bypass those if it isn’t aware of them. For instance, using an ORM (Object-Relational Mapper) usually helps avoid SQL injection, since it handles parameterization under the hood. If appropriate, ask the AI to use an ORM instead of raw queries. Or use high-level APIs (like subprocess.run with a list of arguments in Python, which avoids shell injection, rather than os.system). By steering the AI toward these safer interfaces, you reduce the risk of injection bugs.
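The remaining items on that list can be sketched together in standard-library Python: an allowlist-style validator with a length cap, HTML escaping with `html.escape` before user data reaches a page, and `subprocess.run` with an argument list instead of a shell string. The name pattern, the 50-character limit, and the `grep` log search are illustrative assumptions, not requirements.

```python
import html
import re
import subprocess

# Illustrative rule: starts with a letter; letters, spaces, ' and - only; max 50 chars
NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z '\-]{0,49}")


def validate_name(raw: str) -> str:
    """Reject anything that isn't a plausible name before it reaches a query."""
    if not NAME_PATTERN.fullmatch(raw):
        raise ValueError("invalid name")
    return raw


def render_greeting(name: str) -> str:
    # html.escape turns <, >, &, and quotes into entities, so <script> renders as text
    return f"<p>Hello, {html.escape(name)}!</p>"


def search_logs(term: str, logfile: str) -> str:
    # Arguments go as a list with no shell, so ; | && in `term` are never interpreted.
    # "--" ends grep's options, so a term like "-rf" is treated as a pattern, not a flag.
    result = subprocess.run(["grep", "--", term, logfile], capture_output=True, text=True, check=False)
    return result.stdout
```

Validation and escaping are layers, not substitutes: the parameterized query still does the heavy lifting, and escaping protects the page even when a value slipped past validation.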

Remember the motto: never trust user input. Your AI coding buddy doesn’t inherently have paranoia about inputs, you have to inject that paranoia yourself (pun intended). By insisting on parameterized queries, input validation, and avoiding dangerous functions, you can significantly reduce the risk of injection attacks in your vibe-coded project. You’ll sleep better at night, and Little Bobby Tables will have to find another database to drop.

3.6 Insecure Design: “What Could Possibly Go Wrong?”

Some vulnerabilities aren’t just low-level bugs; they stem from fundamental design decisions (or the lack thereof). Insecure Design refers to flaws in the overall architecture and logic of the application. It’s when developers didn’t consider how features could be abused or how failures could happen. Classic examples: imposing no limits on what users can do (so someone uploads a 100 GB file and crashes your server, or spams password reset requests to annoy users), or a workflow that fails to account for a malicious actor’s behavior. These are the kinds of issues you catch (and prevent) by doing threat modeling and asking, “OK, what could go wrong if someone tries to misuse this feature?”, something AI won’t inherently do unless explicitly told.

AI-generated code tends to lack imagination when it comes to misuse cases. The AI is focused on making the app do what you asked, under normal conditions. It doesn’t know your business context or the potential abuse scenarios. One expert quipped that the AI “doesn’t know your app is handling medical records or that a certain endpoint is hammered by bots”, so it happily creates an endpoint with no rate limiting, no authorization, no audit logging (medium.com). From the AI’s perspective, if it works for the one user in the example, it’s done. The result is often an app that functions perfectly in ideal conditions but has zero resilience against malicious use or even unintended edge cases.

For example, imagine you prompt an AI: “Design a feedback form feature.” It gives you a form and stores feedback in a database. Great. But the AI probably didn’t consider adding a rate limit or CAPTCHA, so an attacker could spam it millions of times (Denial of Service). Or it didn’t think to limit feedback message length, so someone submits a 10-million-character message and your memory blows up. Or maybe it allowed file attachments for screenshots but didn’t restrict file types or scan them, allowing malware uploads. These aren’t “bugs” in the narrow sense; they’re design oversights. Indeed, research found that LLMs often struggle with things like DoS protection or permission logic because those require understanding context and doing “what-if” analysis.
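Here is a minimal sketch of the guardrails that feedback form was missing, in Python with only the standard library. The limits (5 submissions per minute, 2,000 characters, 5MB PNG/JPEG attachments) and the names SlidingWindowLimiter and validate_feedback are hypothetical choices for illustration. The limiter lives in memory, so it only works within a single process, and the attachment check trusts the declared content type; real code should also inspect and scan the file itself.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, List, Optional, Tuple

# Hypothetical limits: tune them for your own product.
MAX_MESSAGE_CHARS = 2000
MAX_ATTACHMENT_BYTES = 5 * 1024 * 1024  # 5 MB
ALLOWED_ATTACHMENT_TYPES = {"image/png", "image/jpeg"}


class SlidingWindowLimiter:
    """Allow at most `limit` events per `window` seconds per key (in memory, one process only)."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        events = self._events[key]
        # Forget timestamps that have slid out of the window.
        while events and now - events[0] >= self.window:
            events.popleft()
        if len(events) >= self.limit:
            return False
        events.append(now)
        return True


feedback_limiter = SlidingWindowLimiter(limit=5, window=60.0)  # 5 submissions/minute/client


def validate_feedback(
    client_id: str,
    message: str,
    attachment: Optional[Tuple[str, int]] = None,  # (declared content type, size in bytes)
    now: Optional[float] = None,
) -> List[str]:
    """Return the reasons to reject a submission; an empty list means it may be stored."""
    problems = []
    if not feedback_limiter.allow(client_id, now):
        problems.append("rate limit exceeded")
    if len(message) > MAX_MESSAGE_CHARS:
        problems.append("message too long")
    if attachment is not None:
        content_type, size = attachment
        if content_type not in ALLOWED_ATTACHMENT_TYPES:
            problems.append("attachment type not allowed")
        if size > MAX_ATTACHMENT_BYTES:
            problems.append("attachment too large")
    return problems
```

With several app servers, the same sliding-window idea would need a shared store so every instance sees the same counts.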

How do we mitigate insecure design when using AI? In short, we must inject human foresight into the process:

-

Explicitly Ask “What Could Go Wrong?”: After the AI produces a design or code, it can be valuable to ask the AI (or yourself) about potential abuses. For instance, prompt: “Review the above code for security and performance issues.” In a second pass, the AI can sometimes identify things like “no rate limiting present” or “this could allow large uploads”. It might not catch everything, but it’s a good practice. Likewise, ask “How can we make this more robust against misuse?” You may be surprised: the AI might then suggest adding size checks or moving that background job onto a queue.

-

Apply Limits Everywhere: A secure design assumes nothing is infinite or infallible. So put limits on user actions: maximum request sizes, maximum requests per minute, maximum items a user can create, etc. (owasp.org). If you’re generating code with AI, include the constraints in your prompt, e.g., “The upload endpoint should reject files over 5MB” or “Add a rate limit of 5 requests per minute to this API.” If you don’t specify them, don’t count on the AI adding them on its own. It’s on us to build in those guardrails.

-

Use Secure Design Patterns: Rely on known good practices in design. For example, use centralized validation and centralized error handling (so nothing slips through the cracks), design workflows to “fail secure” (if something goes wrong, default to a safe state, not an open one). The AI might not automatically do fail-safe design unless prompted. A concrete example: if you ask for a “password reset feature,” also specify “and it should invalidate the old reset link if a new one is generated, and have a short expiration time.” Without that, an AI might generate a simplistic design where any valid reset link works forever (insecure design). Always think: how could an attacker exploit this flow? Then adjust the design accordingly.

-

Threat Modeling and Human Review: There’s really no substitute for human brainstorming of abuse cases. Take whatever feature or code the AI gave you, and spend time imagining you’re the attacker. If you find “if I do X repeatedly or in parallel, bad things happen”, that’s an insecure design issue to fix. Then you can either fix it manually or prompt the AI to, e.g., “Modify the design to account for X abuse.” Make threat modeling a habit for each new feature the AI helps you build.

-

Keep Security Requirements in the Prompt: If you have an established security requirement (like “users should not be able to spam actions” or “financial transactions must be atomic and logged”), mention these up front to the AI. For example: “Create a message board post feature, with a limit of 3 posts per minute per user, and reject any posts over 500 characters.” The initial prompt can bake in some of these design constraints so the AI knows to include them.
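As an example of the password-reset requirement from the secure design patterns item (invalidate old links, expire quickly), here is a minimal in-memory Python sketch. ResetTokenStore and the 15-minute lifetime are hypothetical. It also stores only a hash of each token, so a leaked table does not hand out live reset links, and it makes each token single-use.

```python
import hashlib
import secrets
import time
from typing import Dict, Optional, Tuple

RESET_TOKEN_TTL_SECONDS = 15 * 60  # hypothetical: links expire after 15 minutes


class ResetTokenStore:
    """At most one live reset token per user; tokens expire and are single-use."""

    def __init__(self, ttl: float = RESET_TOKEN_TTL_SECONDS) -> None:
        self.ttl = ttl
        # user_id -> (sha256 of token, expiry timestamp); the raw token is never stored
        self._tokens: Dict[str, Tuple[str, float]] = {}

    def issue(self, user_id: str, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(32)  # 256 bits from a CSPRNG
        # Overwriting the entry invalidates any link issued earlier.
        self._tokens[user_id] = (self._digest(token), now + self.ttl)
        return token

    def redeem(self, user_id: str, token: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        entry = self._tokens.get(user_id)
        if entry is None:
            return False
        digest, expires_at = entry
        if now >= expires_at:
            del self._tokens[user_id]  # expired: clean up and refuse
            return False
        if not secrets.compare_digest(digest, self._digest(token)):
            return False
        del self._tokens[user_id]  # single use: a redeemed link stops working
        return True

    @staticmethod
    def _digest(token: str) -> str:
        return hashlib.sha256(token.encode()).hexdigest()
```

A production version would keep these entries in the database next to the user record. The design choice that matters is that issuing a new token replaces, rather than adds to, the previous one.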

3.7 Authentication Failures: The Broken Lock on the Login Door

Authentication is the process of verifying who a user is (think login, API tokens, etc.), and it’s obviously critical for security. Authentication Failures happen when that process is implemented poorly, allowing attackers to assume other users’ identities. In practice, this could mean weak password handling, no protection against brute force, session mishandling, or home-grown auth that has logical bugs. AI-generated code has a knack for creating these pitfalls if you’re not careful. Why? Because secure authentication is hard and full of subtle requirements, and most code out in the wild doesn’t implement all of them, which is exactly what the AI has learned from.

For instance, if you ask an AI to “create a login form and verify user credentials,” it might do exactly that, but omit things like rate limiting login attempts or hashing the passwords correctly. In fact, it might even store passwords in plaintext or use an outdated hashing method (as we discussed in Cryptographic Failures). Or consider account lockout: unless told, the AI likely won’t add a lockout after, say, 5 failed attempts. As a result, an attacker could try thousands of passwords on an account (a brute-force attack) and the AI-coded app would happily let them keep guessing: essentially no lock on the door. One study observed that AI implementations of auth often lacked rate limiting because so much example code omits it. It’s a common oversight that can have dire consequences (like someone actually guessing a weak password, or just hammering your server).

Another common AI-auth mistake: rolling its own authentication in a flimsy way. For example, instead of using a framework’s secure login module, the AI might craft a custom login check because the prompt was generic. That custom check might not handle things like timing attacks or might use insecure comparisons. A security researcher noted seeing AI-generated code that compared session tokens using regular string equality, which can inadvertently leak info via timing differences. It’s an esoteric issue (constant-time comparison is needed to thwart that), but it shows how even when it looks okay, AI code might have under-the-hood auth weaknesses.
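In Python, the standard library already has the fix: hmac.compare_digest. The sketch below contrasts it with plain ==; the function names are hypothetical. Encoding to bytes first avoids a TypeError that compare_digest raises for non-ASCII str inputs.

```python
import hmac


def tokens_match_naive(supplied: str, expected: str) -> bool:
    # == may stop at the first differing character, so response time can
    # hint at how much of the guess was correct.
    return supplied == expected


def tokens_match(supplied: str, expected: str) -> bool:
    # compare_digest's running time does not depend on where the inputs differ
    # (a difference in length can still be observable, but not the contents).
    return hmac.compare_digest(supplied.encode(), expected.encode())
```

Both functions return the same answers; only the timing behavior differs, which is exactly why this bug survives a casual code review.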


So, how do we fix and fortify authentication in vibe-coded applications?

-

Use Established Auth Libraries/Services: This is the number-one tip. Don’t let the AI reinvent the wheel for logins if you can help it. Prompt it to use the standard framework mechanisms. For example: “Use Devise for authentication” (if Rails), or “Leverage Passport.js middleware for login”. These libraries have already handled things like password hashing, timing attacks, etc. If you’re working in a language without a de facto auth library, consider using OAuth or OpenID Connect providers (which offload auth to a known-good system). The AI can help integrate those if you ask. The key is to avoid a custom “DIY” auth system, because those are bound to have cracks.

-

Enforce Strong Password Practices: Ensure the AI implements proper password hashing (again, see Crypto Failures, e.g., use BCrypt with a salt, not MD5). Also, consider password complexity and multi-factor auth, although those often involve more design (AI can scaffold 2FA setup if prompted, but you need to guide it). The point is, don’t accept an AI output that stores or checks passwords in an insecure way. You might prompt: “Make sure to hash passwords and never store them in plaintext.” Also, use secure password comparison functions (many frameworks have this built in to avoid timing leaks).

-

Add Brute-force Protection: Always think “what if someone tries 1000 passwords?” Your login function should throttle or lock out. If the AI doesn’t include it, you can prompt for it: “Add rate limiting to the login (e.g., at most 5 attempts per 15 minutes per IP/user).” Or use external tools, e.g., if using Django, mention “use Django’s built-in auth and consider django-axes for lockout after failures.” There are also CAPTCHA services, but those might be beyond what we want to get from AI (and CAPTCHAs can hurt user experience). At minimum, a simple delay or counter in code is better than nothing. Any AI-generated auth without rate limiting is incomplete; fix that before deploying.

-

Secure Session Management: Authentication isn’t just the login; it’s also maintaining the session or token after login. Make sure session cookies are marked Secure and HttpOnly and carry an appropriate SameSite attribute. If the AI sets a session cookie, double-check those flags (you may need to modify config for it). Opaque session IDs and API tokens should be random and sufficiently long, and all tokens, JWTs included, should expire. The AI might generate a token that’s predictable (like an MD5 of username+timestamp, not great). Instead, use a cryptographically secure random generator. Prompt example: “Generate a secure random token for the session (e.g., 256-bit random).” Also, ensure the AI implements logout properly (invalidating sessions) and, if you use tokens, a way to revoke them.

-

Don’t Expose Verbose Auth Errors: This is minor but important: when a login fails, the user (and thus an attacker) should not learn whether it was the username or the password that was wrong (otherwise they can enumerate usernames). By default, AI-generated code might print messages like “Username not found” or “Password incorrect.” It’s better to use a generic “Invalid credentials” message for both. The AI’s code may not do this out of the box, so adjust it.

-

Test the Edges: After integrating auth, test things like: Can I bypass login by simply skipping it (e.g., accessing a protected page directly)? The AI might not have wired up the checks on every route. Test session handling: if you delete the session cookie or let the session expire, is access properly blocked? Try common tricks like SQL injection in the login form (in case it built a query insecurely; if you followed the injection advice, that should be handled). Essentially, verify that the “lock” actually works and doesn’t jiggle open under pressure.
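Several of these points (salted password hashing, constant-time comparison, a lockout counter, one generic error message) fit into a short Python sketch. It uses the standard library’s PBKDF2 in place of BCrypt so it runs without extra packages; that swap, the LoginService class, and the in-memory per-username lockout are assumptions for illustration, not the only correct design.

```python
import hashlib
import hmac
import os
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional

# Figure from the OWASP Password Storage Cheat Sheet for PBKDF2-HMAC-SHA256 at the
# time of writing; re-check it, or prefer bcrypt/argon2 via a maintained library.
PBKDF2_ITERATIONS = 600_000
MAX_FAILURES = 5            # failed attempts allowed...
LOCKOUT_WINDOW = 15 * 60    # ...per 15 minutes, per username


def hash_password(password: str, iterations: int = PBKDF2_ITERATIONS) -> str:
    salt = os.urandom(16)  # unique random salt per password
    dk = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return f"{iterations}${salt.hex()}${dk.hex()}"


def verify_password(password: str, stored: str) -> bool:
    iterations, salt_hex, dk_hex = stored.split("$")
    dk = hashlib.pbkdf2_hmac("sha256", password.encode(), bytes.fromhex(salt_hex), int(iterations))
    return hmac.compare_digest(dk.hex(), dk_hex)  # constant-time comparison


# Checked against for unknown usernames, so they take as long as real ones.
_DUMMY_HASH = hash_password("dummy-password-never-used")


class LoginService:
    def __init__(self, users: Dict[str, str]) -> None:
        self.users = users  # username -> stored hash (stand-in for your user table)
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def login(self, username: str, password: str, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        failures = self._failures[username]
        while failures and now - failures[0] >= LOCKOUT_WINDOW:
            failures.popleft()
        if len(failures) >= MAX_FAILURES:
            return "Too many attempts. Try again later."
        stored = self.users.get(username, _DUMMY_HASH)
        if verify_password(password, stored) and username in self.users:
            failures.clear()
            return "OK"
        failures.append(now)
        # Same message whether the username or the password was wrong.
        return "Invalid credentials"
```

One trade-off to weigh: locking by username alone lets an attacker lock a victim out on purpose, which is why the prompt above suggests counting per IP and per user.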

In summary, treat authentication as a first-class concern, not an afterthought. A secure vibe-coded app should handle auth with as much care as the core functionality, if not more. Many of the nasty auth bugs (like unlimited login attempts or weak password storage) can be eliminated by using well-known libraries and explicitly instructing the AI to include security features. The mantra here: never roll your own crypto or auth if you can avoid it, and if you do (even with AI’s help), be extremely thorough in reviewing it. With the right guidance, your AI helper can set up a decent auth system, but it’s up to you to ensure that system isn’t built on sand.
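For the session-management point, a minimal Python sketch of issuing a random session token and a hardened cookie header looks like this. The cookie name "session", the one-hour Max-Age and SameSite=Lax are assumptions. Most web frameworks expose the same flags through their own settings, which is usually where you would set them.

```python
import secrets
from http.cookies import SimpleCookie


def new_session_token() -> str:
    # 32 bytes from the OS CSPRNG = 256 bits of randomness, URL-safe base64 encoded.
    return secrets.token_urlsafe(32)


def session_cookie_header(token: str, max_age: int = 3600) -> str:
    cookie = SimpleCookie()
    cookie["session"] = token
    morsel = cookie["session"]
    morsel["httponly"] = True   # not readable from JavaScript
    morsel["secure"] = True     # only sent over HTTPS
    morsel["samesite"] = "Lax"  # not sent on most cross-site requests
    morsel["max-age"] = max_age
    morsel["path"] = "/"
    return morsel.OutputString()  # value for a Set-Cookie header
```

Compare this with an AI-generated token such as an MD5 of username plus timestamp: an attacker who knows the recipe can recompute it, whereas a token from the secrets module cannot be predicted.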

3.8 Software/Data Integrity Failures: Trusting the Untrustworthy

Software and Data Integrity Failures are all about trust, or rather, misplaced trust. This category involves scenarios where the system accepts software updates, plugins, or data without verifying their integrity. It’s like blindly trusting a stranger’s USB stick labeled “Totally Harmless Updates” and plugging it into your server. In an application context, this often means things like: insecure deserialization (loading data or objects from an untrusted source in a way that can execute code), not verifying digital signatures on updates or packages, or generally assuming data hasn’t been tampered with when it could have been (owasp.org).

AI-generated code, if not guided, might introduce integrity issues simply because it doesn’t consider those trust boundaries. For example, suppose you have the AI help you build a feature that periodically fetches JSON data from a URL and updates your database with it. Did it include a step to verify that JSON’s source or signature? Likely not, unless you specifically asked. It will just retrieve and use the data. What if someone hijacks that URL or alters the data in transit? Your app would blindly trust it, possibly leading to corrupted or malicious data being processed. Another scenario: the AI might use a serialization library to save user session state or objects between requests. If that serialized blob isn’t protected, an attacker could modify it (since it’s coming from the user side) and, say, flip an isAdmin flag or inject data to exploit a known flaw in the deserialization process (owasp.org). There have been real exploits exactly like this (e.g., Java deserialization vulnerabilities) where unsanitized data led to remote code execution.
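A safer alternative to pickling session state is to parse plain JSON and accept only the fields you expect. In the minimal Python sketch below, the ALLOWED_FIELDS allowlist, InvalidPayload and load_preferences are hypothetical. Anything else, such as an injected isAdmin key, is rejected rather than silently trusted; authorization flags like that should come from server-side records, never from client data.

```python
import json
from typing import Any, Dict

# Hypothetical allowlist: the only client-side preferences accepted, and their types.
ALLOWED_FIELDS = {"theme": str, "language": str, "page_size": int}


class InvalidPayload(ValueError):
    pass


def load_preferences(raw: str) -> Dict[str, Any]:
    """Parse untrusted JSON into plain data; never pickle.loads() untrusted input."""
    try:
        data = json.loads(raw)  # produces only dicts, lists, strings, numbers, bools, None
    except json.JSONDecodeError as exc:
        raise InvalidPayload("not valid JSON") from exc
    if not isinstance(data, dict):
        raise InvalidPayload("expected a JSON object")
    unexpected = set(data) - set(ALLOWED_FIELDS)
    if unexpected:
        # e.g. an injected "isAdmin" key: reject instead of trusting it
        raise InvalidPayload(f"unexpected fields: {sorted(unexpected)}")
    for name, value in data.items():
        expected = ALLOWED_FIELDS[name]
        # bool is a subclass of int in Python, so exclude it explicitly
        if not isinstance(value, expected) or isinstance(value, bool):
            raise InvalidPayload(f"field {name!r} must be {expected.__name__}")
    return data
```

Failing loudly on unknown fields is a deliberate choice: it turns a tampering attempt into an error you can log instead of data you quietly process.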


So how do we maintain integrity in our vibe-coded apps? The key is to verify and guard anything that crosses trust boundaries:

-

Validate and Verify Critical Data: If your application is pulling in code or data from an external source (package repositories, APIs, webhooks, etc.), treat it with skepticism. Use checksums, signatures, or at least sanity checks. For example, if the AI helps set up an auto-update mechanism (maybe downloading some plugin code), ensure it verifies a digital signature or uses a secure channel. Prompt the AI: “Include verification of the update’s signature using a public key.” If it’s beyond the AI’s capability, you know to implement it yourself. The OWASP guideline is clear: use digital signatures or similar to verify software and data from external sources.

-

Lock Down Deserialization: If your app needs to serialize/deserialize data (especially user-supplied), be very cautious. Avoid native binary serialization of whole objects if possible; prefer safer data formats like JSON or XML with strict schemas. If the AI code uses something like Python’s pickle or Java’s default object serialization on untrusted data, that’s a huge red flag: both can execute code during deserialization. Instead, prompt for or implement a safer method (e.g., JSON with explicit allowed fields, or libraries that have secure deserialization modes). At minimum, ensure the data includes some integrity check (like an HMAC or signature) so you can detect tampering. For instance, if you must accept a serialized blob from a client, sign it on the server when issuing it, and verify that signature when getting it back.

-

Restrict Functionality from Untrusted Sources: If the AI suggests using a plugin or including a script from a third-party site (perhaps to implement some feature), consider the implications. Loading third-party scripts (like a support chat widget) can potentially access your cookies or sensitive data if not sandboxed. You might need to use subresource integrity (SRI) for scripts, or better, self-host them if you trust them. In general, minimize code execution from sources you don’t fully trust. This ties back to Supply Chain, but extends to runtime behavior too.

-

Secure Your CI/CD Pipeline: This is more dev-opsy, but worth noting: if you used AI to set up deployment scripts or build pipelines, ensure those pipelines themselves are secured. An insecure CI/CD (e.g., pulling from an unverified artifact repository, or credentials stored in plain text in pipeline config) can inject bad code into your product. Make sure your AI-generated deployment scripts use secure channels (HTTPS for artifact fetching, etc.) and that any secrets in the pipeline are handled properly (e.g., use your CI’s secret vault, not hardcoded).

-

Fail Safe on Errors: Sometimes a data integrity issue might manifest as an error (e.g., a signature check fails, or the data format is unexpected). Ensure the AI code doesn’t take a “fail open” approach. For instance, if an update’s signature doesn’t match, the code should not proceed to apply the update (obvious, but bugs happen). If a JSON payload doesn’t parse to the expected fields, don’t just catch the exception and continue as if all is fine; handle it (log it, alert, and skip processing it). Basically, detect integrity issues and stop the process rather than ignoring errors or, worse, using data that failed validation. You might need to add these checks if the AI didn’t, e.g., an “if (!signatureValid) { reject the update }” kind of logic.
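The “sign it when issuing, verify it when it comes back, and fail closed” advice can be sketched with Python’s standard hmac module. The blob format (base64 JSON, a dot, then a hex HMAC-SHA256 tag) and the names sign, verify and TamperedData are assumptions. The key here is random per process for the demo; a real deployment would load a stable key from its secret store.

```python
import base64
import hashlib
import hmac
import json
import secrets
from typing import Any, Dict

# Demo only: a per-process key. In production, load a stable key from your secret store.
SECRET_KEY = secrets.token_bytes(32)


class TamperedData(Exception):
    pass


def sign(payload: Dict[str, Any], key: bytes = SECRET_KEY) -> str:
    body = base64.urlsafe_b64encode(json.dumps(payload, sort_keys=True).encode()).decode()
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{tag}"


def verify(blob: str, key: bytes = SECRET_KEY) -> Dict[str, Any]:
    try:
        body, tag = blob.rsplit(".", 1)
    except ValueError:
        raise TamperedData("malformed blob") from None
    expected = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, expected):
        # Fail closed: never fall back to using the unverified data.
        raise TamperedData("signature mismatch")
    return json.loads(base64.urlsafe_b64decode(body))
```

Note that signing provides integrity, not confidentiality: the client can still read the payload, so keep secrets out of it.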

In short, establish trust boundaries. Decide which inputs and components you inherently trust (very few, ideally just your own code and known libraries) and which you don’t. For those that you don’t, apply verification, sandboxing, and caution. AI won’t naturally insert those trust boundaries; it’s up to us to say, “Hang on, can this data/source be spoofed or malicious?” and then implement the appropriate safeguards. If you handle this well, you can prevent a situation where your AI-coded application becomes the victim of a sneaky integrity attack.
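For the third-party script case from the “Restrict Functionality from Untrusted Sources” item, Subresource Integrity pins a script to the exact bytes you reviewed. The Python sketch below computes the integrity value (sha384, standard base64) and builds a tag. It assumes you already have the reviewed file’s bytes; sri_hash, script_tag and any URL you pass in are illustrative.

```python
import base64
import hashlib


def sri_hash(script_bytes: bytes) -> str:
    """Return a Subresource Integrity value for a script's exact bytes."""
    digest = hashlib.sha384(script_bytes).digest()
    return "sha384-" + base64.b64encode(digest).decode()


def script_tag(src: str, script_bytes: bytes) -> str:
    # Cross-origin scripts must be fetched with CORS (crossorigin attribute)
    # for the browser to run the integrity check.
    return (f'<script src="{src}" integrity="{sri_hash(script_bytes)}" '
            f'crossorigin="anonymous"></script>')
```

If the CDN copy ever changes, even by one byte, the browser refuses to run it. That is the point, but it also means you must update the hash whenever you deliberately upgrade the script.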

3.9 Logging & Monitoring Failures: Out of Sight, Out of Mind

You can’t fix or respond to what you don’t know about. Logging & Monitoring Failures refer to not recording important events or not keeping an eye on your system’s activities, which means attacks and anomalies can go undetected. It also covers logging improperly, like logging too much sensitive info or logging in insecure places, but the main point is a lack of visibility. In vibe-coded apps, this is a double-edged issue: on one hand, the AI might not include any logging or alerting at all (most tutorial code doesn’t focus on that), and on the other hand, if it does log, it might do so in a naive way (like printing secrets to console or browser). Both are problematic.

Imagine someone is quietly trying to break into your AI-generated web app: maybe they’re testing common URLs, trying SQL injection strings, or repeatedly failing login attempts. If you have no logging or monitoring, you’ll never know until it’s too late. Unfortunately, AI seldom adds logging for security events unless asked. The code will function, but there might be no record of what users (or attackers) are doing. A cybersecurity analyst noted that in incident response, vibe-coded apps often show an “absence of logging”; it’s one of the telltale signs of an informally developed system (csoonline.com). Essentially, the app was built to work, but not to tell on bad guys.

On the flip side, we’ve seen AI code that does log or print info, but in the wrong place. For example, an AI might leave verbose error logging on the client-side (e.g., sending full error details to the user’s browser console). This is dangerous because those logs can include stack traces, internal API URLs, or even secret keys/tokens if the error involved those. Logging should be done securely on the server, and sensitive data should be sanitized or omitted. Veracode’s study even highlighted “log injection” issues not being handled by AI in 88% of cases (sdtimes.com). Log injection is when an attacker manipulates input so that log entries are forged or corrupted (imagine an attacker includes newline characters or fake log data in their input to mislead your log files). If AI hasn’t guarded against that, it could make your logs unreliable or help an attacker cover their tracks.
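One common defense against log injection is to escape line breaks and other control characters in untrusted values before they reach a log line. The Python sketch below is one way to do it; sanitize_for_log and the 200-character cap are hypothetical choices, and your logging framework may offer an equivalent encoder.

```python
import logging
import unicodedata


def sanitize_for_log(value: str, max_len: int = 200) -> str:
    """Make untrusted text safe to embed in one log line (a sketch, not a complete encoder)."""
    value = value[:max_len]  # also caps how much an attacker can write per entry
    # Escape backslashes first so attacker-typed escape sequences stay distinguishable.
    value = value.replace("\\", "\\\\")
    out = []
    for ch in value:
        category = unicodedata.category(ch)
        # "C*" covers CR, LF, tab, ESC and other controls; Zl/Zp are Unicode line/paragraph separators.
        if category.startswith("C") or category in ("Zl", "Zp"):
            out.append(f"\\u{ord(ch):04x}")
        else:
            out.append(ch)
    return "".join(out)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
    attacker_input = "bob\n2025-01-01 00:00:00 INFO admin login succeeded"
    # Unsanitized, this would show up as two separate log lines, one of them forged.
    logging.warning("failed login for user=%s", sanitize_for_log(attacker_input))
```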

So how do we shine a light on our AI-generated app’s activities without leaking sensitive info? Some guidelines:

-

Identify Key Events to Log: Not everything needs logging, but security-relevant events definitely do. These include: authentication events (logins, logouts, failed login attempts), access control violations, significant data changes, and obviously any error/exception (which might indicate something malicious if it’s an odd error). When using AI, you can prompt for adding logging like: “Log failed login attempts with username and IP address” or “Log whenever an admin function is called, including who called it.” You’ll likely need to wire this in, as AI might not do it by default. Having these logs means if something fishy happens, you have breadcrumbs to follow.

-

Use a Centralized Logging Approach: Rather than scattering print() statements or console logs (which the AI might do in examples), use a proper logging framework or service. For instance, in Python use the logging module and set an appropriate level and output (file, syslog, etc.), or in Node use a logger like Winston. You can instruct the AI: “Use the XYZ logging library to record events.” Centralizing logs (and later aggregating them with tools like the ELK stack or cloud monitoring services) will make it easier to monitor. The AI can at least scaffold the use of a logging library if prompted.

-

Avoid Logging Sensitive Data: Ensure that your logs don’t become a vulnerability themselves. That means no passwords, no full credit card numbers, no personal info in logs. If the AI code logs requests or errors, double-check that it’s not inadvertently logging secrets. For example, if an error object includes a database password in a stack trace, don’t log it raw; strip it out. Mask or hash values if necessary (e.g., log only the last 4 digits of an identifier if you need it for debugging). This might require manually adjusting what is logged; the AI won’t automatically know what’s sensitive in your context.

-

Implement Alerts for Unusual Activity: Logging is step one, monitoring is step two. This might be beyond pure coding and more into DevOps, but it’s worth mentioning. If you have logs of, say, 50 failed logins in a minute for one account, someone should know about that. AI won’t set up an alerting system for you (unless you specifically integrate with one), but you can. Use tools or scripts to watch the logs for certain patterns (multiple failures, errors, etc.) and notify you (email, Slack, etc.). If you want to get fancy, you could even ask the AI to generate a simple monitoring script (it could output something using, say, fail2ban-like logic or a CloudWatch alert example if on AWS).

-

Protect Log Integrity: Since log injection is a risk, ensure that log entries can’t be spoofed easily. For example, if you log user input, consider adding delimiters or encoding to prevent someone from injecting fake newline characters that might break the log format. Many logging frameworks handle this or provide options to sanitize input. Also, restrict access to logs: only admins or system accounts should read them. If the AI spins up a quick logging solution, check file permissions and where it’s writing. The last thing you want is an attacker reading your logs (which might include clues about your system, or even semi-sensitive info such as usernames).

-

Test Your Logging Setup: Do a quick run: trigger some bad login attempts or an error, and see if it shows up in the logs. Make sure the log contains useful info (timestamp, the event, maybe user ID or IP) without containing secrets. If something expected isn’t logged, adjust the code to log it. If something sensitive is logged, adjust to remove it. Essentially, treat your logging as another feature to be QA’d.
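Several items from this list (centralized logging, recording failed logins with username and IP, keeping secrets out) can be combined in a small Python setup built on the standard logging module. The logger name "app.security", the key names the filter masks and the helper functions are assumptions. Pattern-based redaction is a safety net, not a replacement for simply not logging secrets. Logging the username with %r also escapes embedded newlines, which helps against log injection.

```python
import logging
import re
from typing import Optional

logger = logging.getLogger("app.security")


class RedactSecretsFilter(logging.Filter):
    """Mask values of obviously sensitive keys (password=, token=, ...) before output."""

    PATTERN = re.compile(r"(?i)\b(password|passwd|token|api_key|secret)=([^\s&,]+)")

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()
        redacted = self.PATTERN.sub(r"\1=[REDACTED]", message)
        if redacted != message:
            record.msg, record.args = redacted, ()
        return True  # keep the record, just scrubbed


def configure_logging(handler: Optional[logging.Handler] = None) -> None:
    if handler is None:
        handler = logging.FileHandler("security.log")  # hypothetical path
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s"))
    handler.addFilter(RedactSecretsFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)


def log_failed_login(username: str, ip: str) -> None:
    # %r quotes the value and escapes control characters such as newlines.
    logger.warning("failed_login user=%r ip=%s", username, ip)
```

Running log_failed_login once and reading the output is exactly the kind of quick check the “Test Your Logging Setup” item describes.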

Good logging and monitoring is like having a security camera and alarm system for your application. AI might build you the house, but you have to install the cameras afterwards. With proper logging, even if an attacker attempts something, you have a chance to catch on (or at least have forensic evidence after the fact). And with proper monitoring, you might stop an ongoing attack or fix a bug before it becomes a disaster. Don’t fly blind: wire those lights and dials into your AI-crafted code so you can see what’s happening under the hood.
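The “50 failed logins in a minute” alert from the list above can start as something this small. In the Python sketch below, FailedLoginMonitor is hypothetical and the alert callback stands in for whatever notifier you actually use (email, Slack, a pager). It fires once when an account crosses the threshold inside the window instead of on every failure after that.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Optional


class FailedLoginMonitor:
    """Call `alert(account, count)` when an account hits `threshold` failures within `window` seconds."""

    def __init__(self, alert: Callable[[str, int], None],
                 threshold: int = 50, window: float = 60.0) -> None:
        self.alert = alert
        self.threshold = threshold
        self.window = window
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, account: str, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        events = self._events[account]
        events.append(now)
        # Drop failures older than the window (the newest one always stays).
        while now - events[0] >= self.window:
            events.popleft()
        # Fire exactly when the threshold is reached, not on every later failure.
        if len(events) == self.threshold:
            self.alert(account, len(events))
```

In practice you would feed it from the same place that writes the failed-login log entry, or run the equivalent rule in your log aggregation tool.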

3.10 Mishandling of Exceptions: Spilling Secrets or Hiding Problems

Last but not least, let’s talk about how our AI-generated app handles the unexpected. Mishandling of Exceptions refers to poor error handling, either revealing too much when something goes wrong, or failing to handle the error at all (leading to undefined behavior or crashes). It’s a new entry in the OWASP Top 10 (as of 2025) (owasp.org), highlighting how important robust error handling is for security. In vibe-coded projects, we often see two extreme anti-patterns: the app either crashes and tells the whole world everything (stack traces with juicy details) or silently ignores errors and continues in a weird state. Both can be dangerous.

For example, suppose the AI wrote a piece of code to connect to a database and the connection fails. In a dev environment, printing the error (with the SQL query and maybe the DB credentials in the message) is common. If that happens in production and the app isn’t configured to catch it, the user might see an error page with all that info. Congratulations, you just leaked your database password and server file paths to any random user via an exception message. This is “spilling secrets.” A common real-world scenario: some frameworks in debug mode show a full stack trace on error. If the AI left debug mode on, or you forgot to disable it (tying back to Misconfiguration), you could expose environment variables, source code, etc., whenever an error occurs.

On the flip side, what if the AI, trying to be helpful, caught an exception but then… just kept going without reporting it? For instance:

```javascript
try {
  // do something critical, e.g., charge the customer's card
} catch (e) {
  // quietly ignore the error (the anti-pattern)
}

continueExecution(); // carries on as if everything succeeded
```

This is “hiding problems.” Maybe a crucial step (say, payment processing) failed, but the code ignored the exception and went on, so now the system is in an inconsistent state: the user thinks the operation succeeded because no error was shown, but it actually failed silently. Attackers can sometimes exploit this too: if an error happens during an auth check and it’s swallowed, they might get access anyway (fail open). It can also lead to data corruption, because part of a transaction didn’t complete and wasn’t rolled back. Essentially, failing to handle exceptions correctly can open up all sorts of holes (logic flaws, security bypasses, even denial of service if the system keeps running in a bad state) (owasp.org).
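For contrast, here is the same situation handled properly, sketched in Python. charge_card, PaymentError and is_allowed are hypothetical stand-ins. The payment failure is logged and reported as a failure instead of being swallowed, and the permission check fails closed: any error while checking means “deny”.

```python
import logging

logger = logging.getLogger("app")


class PaymentError(Exception):
    """Hypothetical error raised by the payment step."""


def charge_card(order_id: str, amount_cents: int) -> None:
    # Stand-in for a real payment call; rejects non-positive amounts.
    if amount_cents <= 0:
        raise PaymentError("invalid amount")


def place_order(order_id: str, amount_cents: int) -> str:
    try:
        charge_card(order_id, amount_cents)
    except PaymentError:
        # Record the details (including the stack trace) for developers...
        logger.exception("payment failed for order %s", order_id)
        # ...and stop, instead of continuing as if the charge succeeded.
        return "failed"
    return "paid"


def is_allowed(user: dict, action: str) -> bool:
    try:
        return action in user["permissions"]
    except (KeyError, TypeError):
        logger.warning("permission check error for action %s; denying", action)
        return False  # fail closed: an error never grants access
```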

To ensure our AI-coded app handles errors like a pro (and not a toddler with a jar of cookies), consider the following:

-

Use Global Exception Handling (and Customize It): Most frameworks have a global error handler or middleware. Use it. This allows you to catch any unexpected error in one place. In that handler, log the error (securely, as discussed) and return a generic friendly error message to the user. The AI might not set this up by itself, but you can prompt: “Include a global exception handler that returns a user-friendly error page.” And be sure to disable any default behavior that shows detailed error info. By having a centralized handler, you prevent leaks of stack traces to users and ensure the system doesn’t just crash unabated.

-

Fail Safe (Fail Closed): Design error handling such that if something goes wrong in a security-sensitive operation, the default is to deny or undo, not allow. For instance, if an exception happens during a permission check, don’t just catch it and assume “probably fine”, assume the user is not allowed until proven otherwise. If a multi-step process fails midway, roll back what was done (atomic transactions or compensating actions) (owasp.org). The AI won’t automatically add rollback logic; you might need to implement that or use transaction features of your framework. The key is to avoid “partial successes” or “fail open” scenarios.

-

Meaningful Handling, Not Hiding: It’s okay to catch exceptions, but do something with them. At minimum, log them (so you or an alert system know) and alert the user in a generic way. Do not just continue as if nothing happened. For example, if there’s an exception in processing a user’s order, don’t quietly move on, log it and maybe queue a retry or mark that order as failed. Make sure the AI’s tendency to catch-all and pass (if it did that) is replaced with real handling logic.

-

Avoid Overly Verbose Errors to Users: Users should get a polite error message like “Oops, something went wrong. Try again later.” They should not get “NullReferenceException at line 56 of File X: object foo was null… [and here’s my server path and query].” Check any error responses or pages the AI created. If it’s using a default error page, ensure it’s generic in production mode. If the AI sends e.message to the client, that might be too much; remove it or gate it behind a debug flag that is off in production.

-

Plan for Exceptional Conditions: Some errors are foreseeable (like a service being down, or a network timeout). Incorporate handling for those. For instance, if the AI calls an external API, what if that API times out? Perhaps implement a retry or return a degraded response. This isn’t strictly security, but robust error handling improves resilience (and some security, like preventing an outage from turning into a security incident through unexpected behavior). Attackers often trigger error conditions intentionally (like sending malformed input, or flooding a service to cause timeouts); how your app handles those can determine whether they gain an advantage. If you’ve coded (or prompted) in some graceful failure behavior, you’re ahead of the game. For example, adding timeouts and exception handling around external calls can prevent a simple DoS from freezing your entire app.

-

Monitor for Exceptions: This ties into Logging/Monitoring: make sure exceptions aren’t just handled and forgotten. They should be logged, and ideally, you should be notified if a new type of exception starts popping up frequently. AI code might have subtle bugs that only surface as exceptions under certain conditions; without monitoring, you might not realize users are hitting errors (they might just silently fail). So treat exceptions as things to track.
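The global-handler idea from the first item looks roughly like this as framework-agnostic WSGI middleware in Python. Frameworks such as Flask and Django have their own hooks for the same job, so treat GenericErrorMiddleware as an illustrative sketch. It logs the full traceback server-side under a short reference ID and returns only a generic message with that ID to the user. It only catches errors raised while the wrapped app is called, not errors raised later while a streamed response body is being iterated.

```python
import logging
import sys
import uuid
from typing import Callable, Iterable

logger = logging.getLogger("app.errors")


class GenericErrorMiddleware:
    """Wrap a WSGI app: log unexpected exceptions server-side, show users a generic message."""

    def __init__(self, app: Callable) -> None:
        self.app = app

    def __call__(self, environ, start_response) -> Iterable[bytes]:
        try:
            return self.app(environ, start_response)
        except Exception:
            error_id = uuid.uuid4().hex[:12]
            # Full details (stack trace, path) go to the server log only.
            logger.exception("unhandled error %s on %s", error_id, environ.get("PATH_INFO"))
            body = f"Oops, something went wrong. Reference: {error_id}".encode()
            start_response(
                "500 Internal Server Error",
                [("Content-Type", "text/plain; charset=utf-8"),
                 ("Content-Length", str(len(body)))],
                sys.exc_info(),  # per WSGI, pass exc_info when responding after an error
            )
            return [body]
```

The reference ID is the bridge between the two audiences: the user can quote it to support, and developers can find the matching log entry without the user ever seeing a stack trace.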

In essence, aim for an error handling strategy where users are kept in the dark about the gory details (attackers get no free info) and developers/admins are fully aware of issues (so you can fix them). AI will give you a skeleton, but you need to flesh it out with robust error management. If you do it right, you’ll turn those “Oh no!” moments into minor blips rather than full-blown security incidents or mystery bugs. And with that, we’ve covered the gamut of vulnerabilities and how to avoid them in the world of AI-assisted coding!


Chapter 4: Conclusion

By now, it should be clear that using AI to speed up development doesn’t absolve us of security diligence; in fact, it demands more. The motto for secure vibe coding could well be: “Trust, but verify… and validate, encode, hash, log, limit, and monitor.” With a healthy dose of skepticism and the tips outlined above, you can harness AI’s productivity while keeping your application safe from the common flaws humans (and AIs trained by humans) tend to introduce. Happy (secure) coding!

If you’ve read to the end, here’s a bonus: paste the following prompt into your AI coding IDE, then fix the issues it reports and rerun it until the audit comes back clean.

I want you to perform a full security review of my codebase using the OWASP Top 10 (2025 edition) (@https://owasp.org/Top10/2025/) as your framework. For each of the 10 vulnerability categories below, scan the codebase and identify relevant risks, insecure patterns, or omissions:

1. Broken Access Control (@https://owasp.org/Top10/2025/A01_2025-Broken_Access_Control/)

2. Security Misconfiguration (@https://owasp.org/Top10/2025/A02_2025-Security_Misconfiguration/)

3. Software Supply Chain Failures (@https://owasp.org/Top10/2025/A03_2025-Software_Supply_Chain_Failures/)

4. Cryptographic Failures (@https://owasp.org/Top10/2025/A04_2025-Cryptographic_Failures/)

5. Injection Attacks (@https://owasp.org/Top10/2025/A05_2025-Injection/)

6. Insecure Design (@https://owasp.org/Top10/2025/A06_2025-Insecure_Design/)

7. Authentication Failures (@https://owasp.org/Top10/2025/A07_2025-Authentication_Failures/)

8. Software/Data Integrity Failures (@https://owasp.org/Top10/2025/A08_2025-Software_or_Data_Integrity_Failures/)

9. Logging & Monitoring Failures (@https://owasp.org/Top10/2025/A09_2025-Security_Logging_and_Alerting_Failures/)

10. Mishandling of Exceptions (@https://owasp.org/Top10/2025/A10_2025-Mishandling_of_Exceptional_Conditions/)

For each category, follow this structure:

- **Issue #**: Describe the issue clearly and concisely.

- **Where**: Point to the exact file, line(s), or code snippet.

- **Why it’s a Problem**: Link it to the OWASP category and explain the risk.

- **Fix Recommendation**: Suggest a secure fix or refactoring approach.

- **(Optional)**: Provide example code to demonstrate the fix.

- **Priority**: Label as High / Medium / Low based on exploitability and impact.

Additional instructions:

- Flag any hardcoded secrets (e.g. API keys, tokens) or insecure uses of crypto libraries.

- Flag outdated or unverified third-party packages and dependencies.

- Highlight missing validation, lack of authentication, or risky default settings.

- Look for missing logging, silent failures, or error handling that could hide attacks.

- Use markdown formatting for clarity and readability.

Output format should be a structured audit report, organized under each OWASP Top 10 header.

Only analyze code; skip comments, README files, or unrelated assets. Be concise but technically accurate. Assume this audit will be reviewed by a developer familiar with the codebase.
